Deliberate AI Use

January 19, 2026

At the Agents Anonymous meetup, someone asked who still writes code by hand. I raised my hand. They joked we were losers. When I asked who’s still doing LeetCode, a couple of people laughed and said no - if a company is still asking LeetCode questions, it’s not on their list. Others grimaced. Here’s why I’m grinding LeetCode anyway.

AI has limits. Some people are running Gas Town setups with dozens of coordinated agents or Ralph Loops that keep feeding AI output back into itself until it dreams up the right answer. Cool experiments. But when you’re trying to get real work done, use deterministic tooling where possible and AI where traditional tooling can’t handle it. Otherwise you’re just wasting tokens. Use lint rules to surface violations instead of burning context on things grep could find.
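To make the lint-versus-tokens point concrete, here is a minimal sketch of a deterministic check. The function name `find_violations` and the "no stray print calls" rule are assumptions made up for illustration, not part of any tool mentioned here.

```python
import re
from typing import Iterable


def find_violations(lines: Iterable[str], pattern: str) -> list[tuple[int, str]]:
    """Return (line_number, text) for every line matching the regex pattern."""
    rule = re.compile(pattern)
    return [
        (number, line.rstrip("\n"))
        for number, line in enumerate(lines, start=1)
        if rule.search(line)
    ]


# Hypothetical source file contents, inlined so the sketch runs on its own.
source = [
    "import os\n",
    "print('debug')  # left over from debugging\n",
    "value = os.getcwd()\n",
]

# Hypothetical rule: flag lines that start with a bare print() call.
hits = find_violations(source, r"^\s*print\(")
for number, text in hits:
    print(f"line {number}: {text}")
```

A check like this costs no tokens and gives the same answer every run, so the agent only needs to see the short list of hits rather than the whole file.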

There’s a middle ground. Instead of agents fighting in chaos, run it like an organization where each agent operates in its own git worktree. Tools like John Lindquist’s worktree-cli and Bearing let you spin up isolated branches for parallel work.
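As a rough sketch of the one-worktree-per-task idea, the snippet below builds a plain `git worktree add -b <branch> <path>` command. It does not show how worktree-cli or Bearing work internally; the `task/` branch prefix, the sibling-directory layout, and both function names are assumptions for illustration.

```python
import subprocess
from pathlib import Path


def worktree_command(repo: Path, task: str) -> list[str]:
    """Build the `git worktree add` command that gives one task its own checkout."""
    branch = f"task/{task}"                      # assumed branch naming scheme
    path = repo.parent / f"{repo.name}-{task}"   # assumed layout: sibling directory
    return ["git", "-C", str(repo), "worktree", "add", "-b", branch, str(path)]


def add_worktree(repo: Path, task: str) -> None:
    """Create the worktree; raises CalledProcessError if git reports a failure."""
    subprocess.run(worktree_command(repo, task), check=True)


# Only build and print the command here, so nothing is created on disk.
cmd = worktree_command(Path("/tmp/myrepo"), "fix-login")
print(" ".join(cmd))
```

Each agent can then be pointed at its own directory, so parallel edits never touch the same working tree.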

I’m not spinning up 30 agents for 30 worktrees. I’m staying in the loop - deciding when to background work, when to clear context, steering interactively using the patterns I’ve documented. Concurrency without contention. I can have a hundred things I’m working on without the overhead of switching between them or worrying about conflicts in real time. When conflicts arise later, I just run another Claude instance to integrate the branches.

Instead of giving agents hooks and mailboxes to communicate, or running them as microservices, Bearing uses a single daemonized process or cron job that periodically queries status using deterministic tools and updates a JSONL file. Claude Code hooks just read that static file. Simple.
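The poll-and-write pattern can be sketched roughly like this. It is not Bearing's actual code or file format: the record fields (`path`, `dirty`), the file name `status.jsonl`, and all function names are assumptions, and `git status --porcelain` stands in for whatever deterministic tools the real poller uses.

```python
import json
import subprocess
import tempfile
from pathlib import Path


def worktree_status(path: Path) -> dict:
    """Ask git (no AI involved) whether a worktree has uncommitted changes."""
    out = subprocess.run(
        ["git", "-C", str(path), "status", "--porcelain"],
        capture_output=True, text=True, check=True,
    ).stdout
    return {"path": str(path), "dirty": bool(out.strip())}


def write_status(status_file: Path, records: list[dict]) -> None:
    """Write one JSON object per line, then swap the file into place."""
    tmp = status_file.with_suffix(".tmp")
    tmp.write_text("".join(json.dumps(record) + "\n" for record in records))
    tmp.replace(status_file)  # readers never see a half-written file


def read_status(status_file: Path) -> list[dict]:
    """What a hook would do: parse the static file, nothing more."""
    return [json.loads(line) for line in status_file.read_text().splitlines() if line.strip()]


# Demo with hand-written records so the sketch runs without a git repository.
with tempfile.TemporaryDirectory() as directory:
    status_file = Path(directory) / "status.jsonl"
    write_status(status_file, [
        {"path": "repo-a", "dirty": False},
        {"path": "repo-b", "dirty": True},
    ])
    loaded = read_status(status_file)

print(loaded)
```

In a setup like this, a cron job would call `worktree_status` for each worktree and pass the results to `write_status`, while hooks only ever call something like `read_status`.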

The difference between this and Gas Town? I’m the orchestrator, not a swarm of AI agents cosplaying as Mad Max characters.

Sub-agents don’t magically solve the context limit problem. I watched an agent update a config file with the path to ffmpeg, then immediately spawn a sub-agent that started grepping the filesystem to find it. The information was right there. It just got lost in the handoff.

If you can’t reason through problems yourself, you can’t tell when AI messes up.

I’m Still Grinding LeetCode in 2026

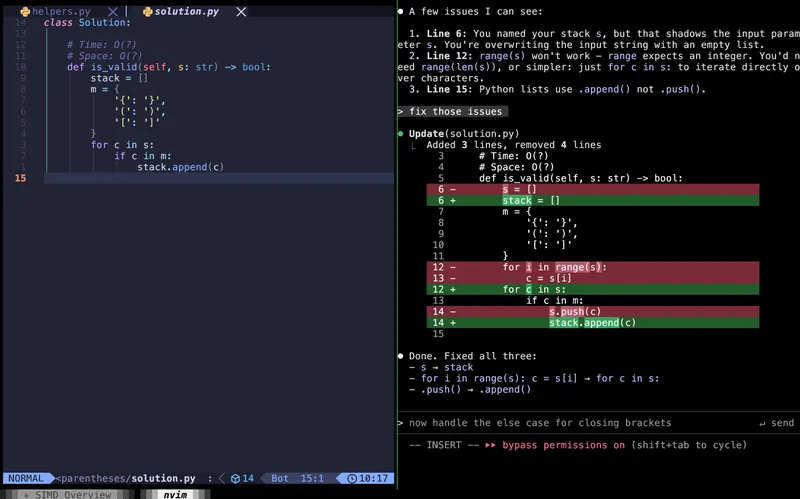

I solve LeetCode problems while vibing with Claude Code. We go back and forth. I write code, it spots issues, I fix them. The AI doesn’t solve it for me. I’m driving; it’s my pair-programming partner.

My workflow: I prompt an LLM with a problem number. It searches the web, reads the problem, creates the scaffolding and test cases. It knows the solutions (or can find them online and fact-check if needed), writes the tests, spins up a blank file, and runs the tests for me. Sometimes I even code with my voice - “create a stack called S, check if it’s empty and if not, push Y.”
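For illustration, that spoken instruction might come out as something like the following. This is a literal reading of the sentence, wrapped in a hypothetical helper `push_if_not_empty` so it can be run and checked; it is not taken from any particular problem.

```python
def push_if_not_empty(s: list, y) -> list:
    """Literal reading of the dictated line: push y only when the stack has items."""
    if s:              # "check if it's empty and if not..."
        s.append(y)    # "...push Y"
    return s


s = []                           # "create a stack called S"
print(push_if_not_empty(s, 7))   # stack is empty, so nothing is pushed
print(push_if_not_empty([1], 7)) # stack has an item, so 7 is pushed on top
```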

I built LeetDreamer to generate AI-narrated solution videos, and LeetDeeper, a collection of submodules: knowledge bases, scraping tools, and local solution runners with agentic problem generation from existing repositories. I seed the context with scraped content, then get instant AI coaching with video explanations.

The frontier is moving fast and it’s made of sand. But fundamentals will probably be around for a long time. Thinking will probably always matter.